Beyond Snippets: Where Are AI's Software Masterpieces?

Stay pragmatic: neither fear nor follow the vibe coding hype

The year is 2025, and we are surrounded by AI models that can supposedly do anything.

Zuckerberg, Amodei, Siemiatkowski, and many others have jumped on the bandwagon of predicting that AI will soon replace humans, especially when it comes to creating software.

Redis, Postgres, Vercel, Supabase, Go, Zig, Ghostty: pick whatever software, tool, or tech company you like. These are all remarkable creations that many of us use daily, directly or indirectly. Some we love, some we tolerate, but each represents not just code but entire ecosystems built through human collaboration: communication, negotiation, documentation, and years of iteration and refinement.

Prototype

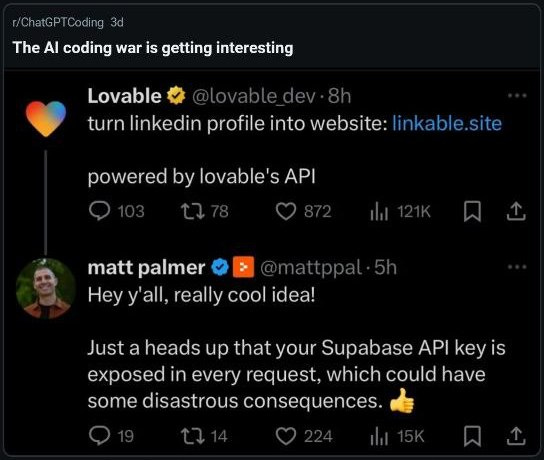

In recent months I've tested many low-/no-code AI tools (or tools used as such), like Lovable, Bolt, and, in a sense, Cursor, across several disposable projects. To be clear: I'm not talking about the deliberate use of AI as a collaborative tool. Used that way, these models excel as programming partners for discussing ideas and pair programming, particularly in domains where I already have expertise (which helps me spot hallucinations), or for generating boilerplate code and templates.

The real problems emerge when we venture into what Andrej Karpathy has dubbed vibe coding, whatever that actually means beyond surrendering control after a few iterations.

On LinkedIn I've watched product managers, hobbyists, and non-technical folks having a grand old time cranking out their fantastic MVPs, right up until they notice their free credits have evaporated or their credit cards have been maxed out. Suddenly they're facing mountains of generated garbage they're technically incapable of fixing themselves. And the machine can't fix it either.

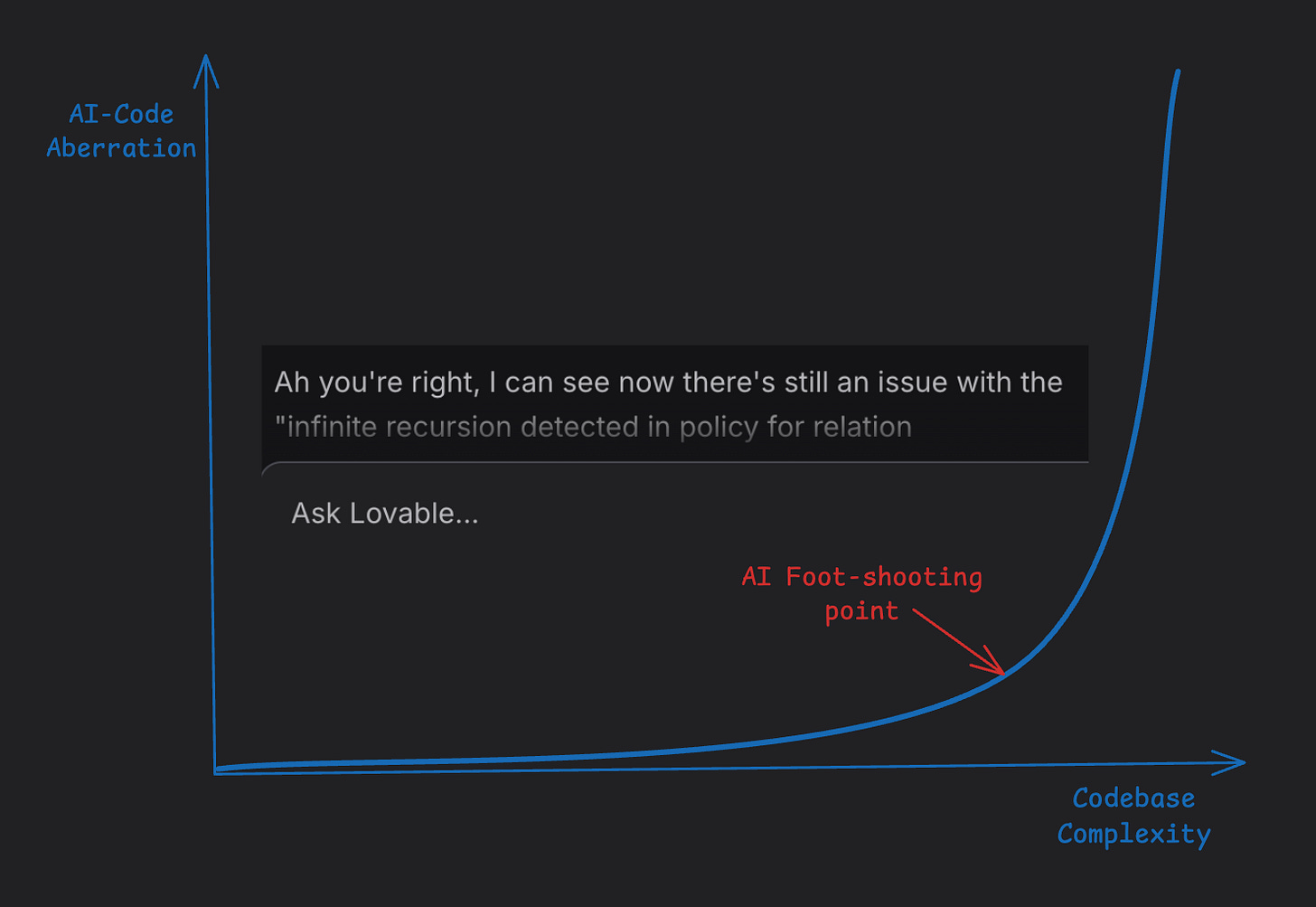

They've reached what I call the AI Foot-Shooting Point: that special moment when the LLM has stacked so many layers of useless, interdependent code that even the most advanced models can no longer navigate the spaghetti mess they've created.

Don't get me wrong: it's fantastic that this new way of prototyping exists. I'm actually happy if software engineers can stop doing this themselves and stressing over that first phase of experimentation, leaving the fast-iteration bootstrap process to someone else. But remember that, especially at this stage, this stuff is absolute garbage under the hood. It is what it is: a prototype. That's why your iterations stop working at some point and you need to call in experts to rewrite everything for you. Don't forget to pay them enough to clean up the mess.

Case Study

I've tested this myself on a few projects; here's one as an example. I set out to build something deliberately simple: a fair-compensation salary-matching SaaS, using a very common stack (Next.js/Supabase/Vercel/Postgres/Stripe), technologies with abundant resources and documentation online.

I used the most powerful models available. For the first 10-15 iterations, everything went remarkably smoothly with minimal intervention on my part. Even then, though, the interventions I did make would have been exceptionally difficult, if not impossible, for a non-technical person without hours spent figuring out what to do: tasks like migrating a database schema, managing data types and formats, and other "simple" problems that would baffle a novice.

Then things started breaking down on seemingly trivial tasks. For example, I wanted a form field to appear only when the user's role was "Company", not "Candidate". The request was straightforward: in the simplest case, without touching any backend logic, the model could have just hidden the textarea on the frontend based on the role stored in the session.
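To give a sense of how small this task is, here is a minimal, framework-agnostic TypeScript sketch of that frontend-only check. The `Session` shape, the field names, and the `visibleTo` list are hypothetical, not taken from the actual project; in a real Next.js app the same check would live inside the component that renders the form.

```typescript
// Hypothetical roles from the case study; the real app's session shape is unknown.
type Role = "Company" | "Candidate";

// Hypothetical session object, e.g. populated from the auth provider on login.
interface Session {
  role: Role;
}

interface FieldConfig {
  name: string;
  kind: "text" | "textarea";
  // Roles allowed to see this field; undefined means every role sees it.
  visibleTo?: Role[];
}

// Hypothetical form definition: only the textarea is role-restricted.
const FORM_FIELDS: FieldConfig[] = [
  { name: "title", kind: "text" },
  { name: "salaryRange", kind: "text" },
  { name: "compensationNotes", kind: "textarea", visibleTo: ["Company"] },
];

// Pure, frontend-only check: no backend call, no schema change.
function isFieldVisible(field: FieldConfig, session: Session): boolean {
  return field.visibleTo === undefined || field.visibleTo.includes(session.role);
}

// Returns the fields a given session should render, in their original order.
function visibleFields(session: Session): FieldConfig[] {
  return FORM_FIELDS.filter((field) => isFieldVisible(field, session));
}

// Usage: only a "Company" session gets the textarea.
console.log(visibleFields({ role: "Company" }).map((f) => f.name));
console.log(visibleFields({ role: "Candidate" }).map((f) => f.name));
```

The first call lists all three fields; the second omits `compensationNotes`. Hiding a field this way is purely cosmetic, so if the data actually matters, the backend should still enforce the role.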

But no. The same AI capable of state-of-the-art complex math struggled with such a simple task, and with each attempted fix, something else broke. Eventually, I gave up and investigated it myself. What I discovered was a development horror story: tons of dead code left behind for no apparent reason. Even the best models, fine-tuned specifically for complex coding, displayed a massive bias toward, and competence in, adding code rather than deleting, cleaning up, and refactoring it. Why?

Refactor Inability: Motorcycle Gloves for Playing Guitar

This additive bias likely stems from how code appears in training data. Most publicly available code represents the latest snapshot of a particular snippet or repository, not the iterative process that led there. The models may occasionally train on diffs containing exemplary refactors, but they miss the context of why those refactors happened.

Real software development isn't linear. Sometimes you duplicate code to ship a feature, then apply DRY principles later. Sometimes you complicate things to meet a deadline, then apply KISS to simplify when you have breathing room. Sometimes it's August, everyone's on vacation, and you finally have time for that massive refactor where you apply all your knowledge, intentions, and creativity to sculpt the codebase like an artist.

AI simply can't do this (yet?), and it's painfully obvious. The models excel at LeetCode-style problems and can provide fantastic snippets for bounded, well-defined tasks. In those moments, you can genuinely feel them channeling the best of human knowledge and creativity.

But as complexity grows, they deteriorate dramatically, caught in a positive feedback loop where they compound their own mistakes without recognizing them. They show no hesitation or shame in layering garbage solutions to appease you while you rush to build yet another IMDb-and-AI-powered movie recommendation tool that nobody asked for (and that Netflix probably already does better as part of its paid service).

So What?

AI tools can excel at scaffolding projects and generating boilerplate, but without human intervention they struggle with production essentials like complexity management, error handling, security, and optimization as soon as the scope grows even slightly beyond an MVP or a single feature. They do make developers more productive, but the gap between hype and reality remains stark.

The industry suggests AI will replace engineers imminently, but at the moment it can't reliably implement basic features without breaking others. Production software requires extensive testing, edge-case handling, and architectural decisions that demand domain expertise AI lacks.

Until we see significant, infrastructurally stable software built primarily by AI, I remain skeptical of the most ambitious claims.

So here we are in 2025, surrounded by evangelists preaching the gospel of our imminent obsolescence while their billion-dollar companies still employ armies of human developers to build anything that actually works.

The revolution is perpetually one parameter update away. Meanwhile, I'll keep watching the hype train barrel forward on VC fuel while the rest of us quietly fix the AI-generated spaghetti code it leaves behind.