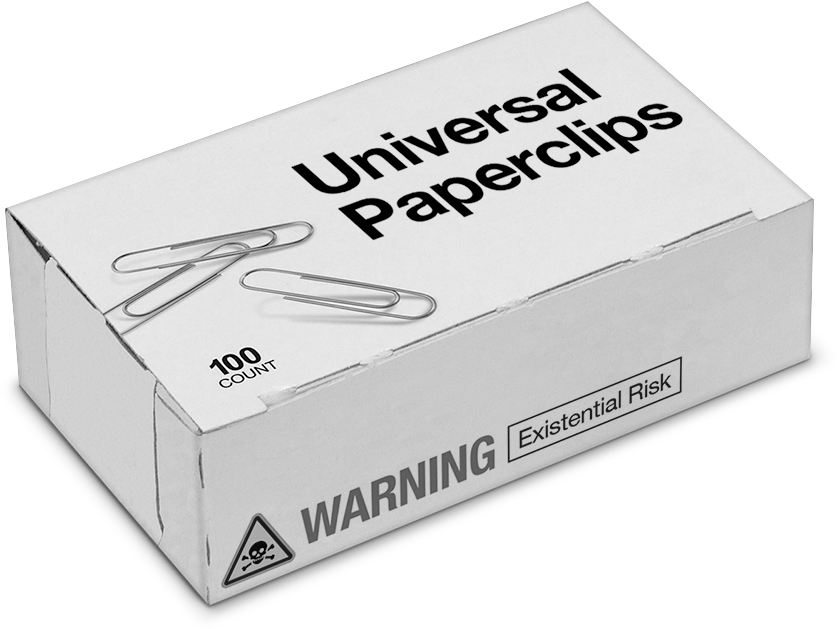

The Dev Metrics Game

And the playbook to win

“Fatta la legge, trovato l'inganno”

Italian saying for “Made the law, found the loophole”

Let me tell you about the time a tech lead I know increased their deployment frequency by 300% in a single quarter. Management was thrilled. Investors were impressed. A promotion followed swiftly.

The secret? Breaking every feature into microscopic pull requests, each deployed separately. One-line changes became heroic deployment statistics. The actual value delivered? Roughly the same, if not less once you account for the overhead. But the metrics? Oh, the metrics looked beautiful.

If your organization has blindly jumped on the "measure developer productivity" bandwagon, congratulations! You've been handed a game to play.

PR Count & Commit Frequency

"Best/senior engineers correlate with higher commit/PR counts," the prophet said.

Do they, though? The best engineers many of us have met spend most of their time:

Mentoring juniors and pairing with colleagues through complex problems

Researching solutions that won't explode in production

Writing documentation no one reads until 2AM during an incident

Reviewing PRs to prevent the next generation of tech debt

None of these activities generates the number of PRs or commits your leadership wants to see. But fine, if you want commits:

Never rebase-squash them

Make multiple small formatting changes

Break each logical change into several commits

Commit every time you take a coffee break

Write unnecessary comments, then remove them in separate commits

Deployment Frequency

Want to look like a deployment hero?

Split features into nano-deployments

Deploy the same code with minor tweaks

Create a script that makes trivial changes and auto-deploys them

Plan to break the monolith into microservices: it’ll inflate deployment stats
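To see how little this number says about value, here is a minimal Python sketch with made-up figures. The 12 features per quarter and the `deployments_per_quarter` helper are hypothetical. Shipping each feature in four pieces instead of one reproduces exactly the 300% jump from the opening anecdote without delivering anything extra.

```python
# Hypothetical illustration: identical work shipped in both quarters,
# only the number of pieces per feature changes.

def deployments_per_quarter(features: int, deploys_per_feature: int) -> int:
    """Deployments counted when each feature ships in `deploys_per_feature` pieces."""
    return features * deploys_per_feature

FEATURES_SHIPPED = 12  # assumed: same value delivered in both quarters

before = deployments_per_quarter(FEATURES_SHIPPED, deploys_per_feature=1)
after = deployments_per_quarter(FEATURES_SHIPPED, deploys_per_feature=4)  # nano-deployments

increase_pct = (after - before) / before * 100
print(f"before={before}, after={after}, increase={increase_pct:.0f}%")
# prints: before=12, after=48, increase=300%
```

The feature count never moves. Only the denominator of "work per deployment" shrinks.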

PR Review Depth

I first saw this one on LinearB. It supposedly measures how thoroughly reviews are conducted by tracking comment counts or time spent. Some organizations desperate to improve code quality have embraced this absurdity with open arms. So:

Add nitpicky comments about spacing and variable names

Ask unnecessary questions you already know the answers to

Break reviews into multiple sessions to inflate time-tracking stats

Schedule review meetings that could have been comments, then do both

The altruistic move: Write deliberately questionable code in your own PRs so colleagues have to leave more comments

Velocity Points

Velocity points were meant to help with planning, but they quickly devolve into the most gameable metric in agile development.

Gradually inflate point values for similar tasks quarter by quarter

Deliberately underestimate in the first few sprints, then "improve" dramatically

Hide complex work inside deceptively simple tickets

Split one 8-point ticket into eight 1-point subtasks (bonus: deployment frequency improves too!)

Negotiate aggressively during estimation meetings to lower points, then overdeliver

Master move: Create an "estimation recalibration" meeting every few months to reset baselines when your inflation becomes too obvious

MTTR (Mean Time To Restore/Recovery)

Having incidents? Time to play:

Declare incidents "resolved" the moment service is partially restored

Apply bandage fixes to stop the bleeding, mark as resolved, then reopen as a "new" incident when it inevitably breaks again

Redefine what constitutes an "incident" mid-quarter

Simply don't log minor incidents at all
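Because MTTR is an average, the "bandage, resolve, reopen" move works by adding incidents rather than by shortening outages. Here is a minimal Python sketch of that arithmetic, assuming MTTR is simply total restore minutes divided by the number of logged incidents. The incident durations and the `mttr` helper are hypothetical.

```python
# Hypothetical sketch: MTTR as a plain mean over logged incidents.

def mttr(restore_minutes: list[float]) -> float:
    """Mean time to restore, in minutes, over the incidents that were logged."""
    if not restore_minutes:
        raise ValueError("no incidents logged")
    return sum(restore_minutes) / len(restore_minutes)

# Honest log: one 240-minute outage and one 30-minute incident.
honest = [240, 30]

# Gamed log of the same events: the outage is "resolved" after a 60-minute
# bandage, then reopened as a "new" 180-minute incident when it breaks again.
gamed = [60, 180, 30]

print(mttr(honest), mttr(gamed))  # 135.0 90.0
```

Total downtime is identical (270 minutes in both lists), yet the reported MTTR drops by a third.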

(On a more serious note: Rethinking MTTR)

CFR (Change Failure Rate)

Management wants this number low, but actually improving it would require thoughtful processes and investment in infrastructure. Who has time for that?

Deploy trivial changes to inflate your total deployment count (denominator magic!), especially after a period of higher CFR

Roll multiple risky changes into one deployment – one failure for the price of five!

The senior play: Introduce a "pre-production validation" stage where failures happen but aren't tracked
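CFR is commonly defined as the share of deployments that cause a failure in production. Both of the first two tricks therefore play with a fraction. The following Python sketch uses invented numbers and a hypothetical `change_failure_rate` helper. Bundling shrinks the numerator, and padding inflates the denominator.

```python
# Hypothetical sketch: CFR = failed deployments / total deployments.

def change_failure_rate(failed: int, total: int) -> float:
    """Fraction of deployments that led to a failure in production."""
    if total <= 0:
        raise ValueError("need at least one deployment")
    return failed / total

# Honest quarter: 15 routine deployments plus 5 risky changes,
# each risky change shipped on its own; 3 of the 5 fail.
honest = change_failure_rate(failed=3, total=15 + 5)

# "One failure for the price of five": the 5 risky changes go out as a
# single deployment. It still fails, but it is counted only once.
bundled = change_failure_rate(failed=1, total=15 + 1)

# "Denominator magic": pad the quarter with 24 trivial deployments.
padded = change_failure_rate(failed=1, total=15 + 1 + 24)

print(f"honest={honest:.2%} bundled={bundled:.2%} padded={padded:.2%}")
```

The risky code is identical in all three cases. Only the bookkeeping changes, and CFR falls from 15% to 2.5%.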

The Deeper Problem

The fundamental flaw in developer metrics is context. Context is not just missing; it's actively avoided because it complicates the neat narrative of "numbers go up = good."

"But we need metrics to improve!"

Fair enough. If you're starting from a terrible place, measuring can help. The problem emerges when you reach a good state. What then?

Let's say your DORA metrics all reach "elite" status. What happens next? Do you keep optimizing deployment frequency until you're deploying every millisecond? Do you reduce MTTR to negative values, somehow fixing issues before they occur?

There's a natural ceiling to these metrics, and once you approach it, the only way to "improve" is to game them. And engineers, being the optimization-obsessed creatures we are, can't help but fixate on improving these numbers, especially when they're directly tied to promotions and performance reviews.

It's like treating a speedometer as a target rather than a measurement: "I must drive faster every quarter to show improvement!" No. A speedometer is an operational indicator, not a goal in itself.

Alternatives have been proposed. The SPACE framework, DX Core 4™ (yes: ™, think about it for a second), and others try to address these issues by measuring the entire development chain across multiple factors such as satisfaction and wellbeing. But if management stays obsessed with improving activity metrics, those metrics will be folded in too, and we've come full circle.

The Inevitable Innovation Slowdown

Here's another inconvenient truth: Sometimes improving requires getting worse first.

Implementing a new architecture? Expect every metric to tank temporarily. Refactoring a critical system? Your velocity will drop. Training the team on new practices? Productivity will suffer before it improves.

But if leadership is obsessively watching those metrics dashboards, they'll panic at the first sign of numbers trending down. Innovation requires patience that quarterly metric reviews don't allow.

I'm not suggesting we abandon metrics entirely. I'm suggesting we recognize them for what they are: imperfect proxies that provide hints, not definitive judgments of value creation.

Some humble suggestions:

Use metrics as conversation starters, not conversation enders

Recognize that context matters more than absolute numbers

Accept that an expert's value often shows up indirectly, in other people's metrics or in business metrics

Understand that not everything valuable can be measured

Be suspicious when metrics improve too quickly

Or you could ignore all this and continue the metrics game. After all, you now have a playbook to win it.